Eight art shows explore reality, illusion and the need for change

My Brush With Madiba

Video: Filmmaker Cyrus Sutton creates the ultimate surf van

Dark Noise Collective Part II: Franny Choi and Nate Marshall

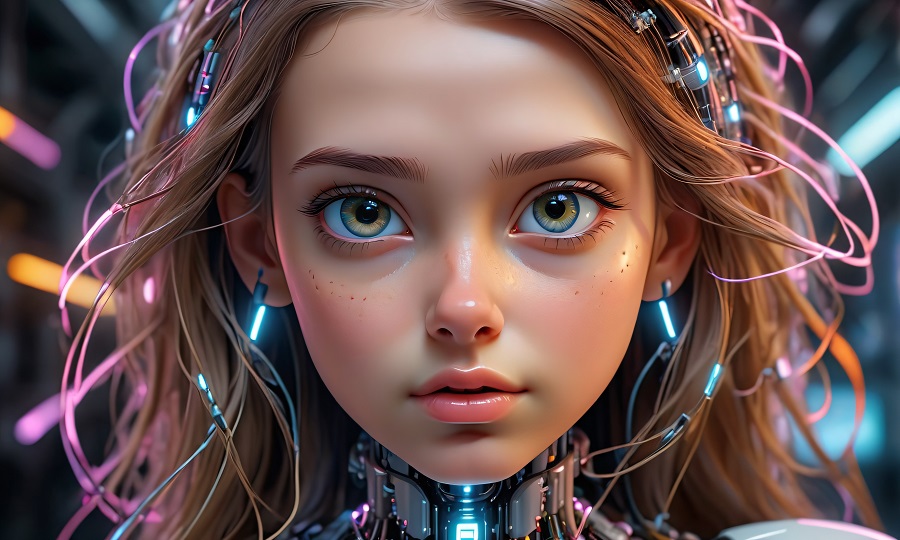

A/B testing personality traits to improve engagement with your AI girlfriend

Building deeper connections with an AI companion fascinates anyone curious about how digital relationships are evolving. As artificial intelligence moves into romantic relationships, the potential for more genuine and emotionally satisfying interactions grows. Through A/B testing of personality traits, developers and users can help shape these experiences, making them more authentic, emotionally engaging, and ultimately more rewarding.

What is A/B testing in the context of AI romance?

A/B testing typically means showing two versions of a product to comparable, randomly assigned groups of users and measuring which version performs better. In digital relationships, the method takes on a new meaning: it centers on experimenting with combinations of personality traits in an AI girlfriend and examining which configurations foster stronger attachment and help reduce loneliness.
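In practice, the two groups are often formed by assigning each user to a variant at random but consistently, so a person always meets the same version of the companion. A minimal sketch, assuming each user has a stable string ID; the function name and the experiment name are hypothetical, not taken from any particular app:

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically map a user to one variant of an experiment."""
    # Hash the experiment name together with the user ID so the same user
    # can fall into different groups in different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hex digest as a large integer and pick a bucket from it.
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_variant(uid, "playful-vs-calm"))
```

Hashing instead of storing a random draw means the assignment can be recomputed anywhere without a lookup table, and the split comes out close to 50/50 over many users.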

Letting a virtual partner adjust her communication style, humor, or approach opens up many possibilities. The aim is not only to keep conversations lively but also to improve user experience and emotional comfort. When A/B testing reveals which traits prompt more frequent or meaningful exchanges, the results can directly inform the ongoing development of digital companions such as those found on Kupid.

How do you design A/B personality tests for AI girlfriends?

Effective A/B experiments begin by pinpointing the attributes most likely to influence engagement or happiness. Some tests swap a single trait, while others combine several adjustments at once. Single-trait tests make it easier to attribute a difference to that trait; combined changes can reveal how traits interact but need more users to untangle. A gradual transition between versions helps keep changes from feeling abrupt or artificial and offers a smoother path to reliable behavioral data.
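One way to picture a gradual transition is to score each trait numerically and move the scores from the old profile toward the new one over a set number of days. The sketch below assumes traits are stored as numbers between 0 and 1 with the same keys in both profiles; the linear ramp, the function name, and the 14-day example are illustrative choices, not a documented method:

```python
def blend_traits(old: dict[str, float], new: dict[str, float],
                 day: int, ramp_days: int) -> dict[str, float]:
    """Linearly interpolate every trait score from `old` toward `new`.

    Assumes both profiles use the same trait names and a 0-1 scale.
    """
    if ramp_days <= 0:
        return dict(new)  # no ramp requested: switch immediately
    # Fraction of the ramp completed, clamped to [0, 1] so out-of-range days are safe.
    t = min(max(day / ramp_days, 0.0), 1.0)
    return {trait: (1 - t) * old[trait] + t * new[trait] for trait in old}


calm = {"humor": 0.2, "empathy": 0.8}
playful = {"humor": 0.8, "empathy": 0.8}

for day in (0, 7, 14):
    print(day, blend_traits(calm, playful, day, ramp_days=14))
```

Only humor differs between the two example profiles, so this ramp softens a single-trait swap; the same function would work unchanged for multi-trait changes.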

Subtle changes in how the AI responds let engagement patterns surface naturally. Observing which combinations resonate across different moods or scenarios gives nuanced insight into what drives lasting connection in romantic relationships.

Examples of traits to test

Personality traits sit at the core of every interaction with a digital companion. Developers frequently experiment with friendliness, humor, empathy, curiosity, and assertiveness. For example, comparing a playful persona with a calm, supportive one often yields valuable behavioral data.

Other variations might explore openness to new topics, responsiveness, or confidence. Replacing a witty conversationalist with a thoughtful listener for half of the participants can highlight differences in how people start or sustain chats with their AI companion. The sheer range of possible variations shows how many factors shape genuine engagement.
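The traits named above can be written down as a small profile so each variant is explicit. In this sketch the `Persona` class, the 0-to-1 scores, and the example values are all assumptions made for illustration. The helper simply reports which traits differ, a quick way to confirm that a test meant to swap one trait really changes only that trait:

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class Persona:
    """Hypothetical trait profile; each score runs from 0 (low) to 1 (high)."""
    friendliness: float
    humor: float
    empathy: float
    curiosity: float
    assertiveness: float


def changed_traits(a: Persona, b: Persona) -> list[str]:
    """List the trait names whose scores differ between two personas."""
    return [f.name for f in fields(Persona) if getattr(a, f.name) != getattr(b, f.name)]


calm_supportive = Persona(friendliness=0.8, humor=0.3, empathy=0.9,
                          curiosity=0.5, assertiveness=0.3)
playful = Persona(friendliness=0.8, humor=0.9, empathy=0.9,
                  curiosity=0.5, assertiveness=0.3)

# A clean single-trait test should differ in exactly one field.
diff = changed_traits(calm_supportive, playful)
assert len(diff) == 1, f"expected one changed trait, got {diff}"
print("Variant B changes:", diff)
```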

Structuring experiments for long-term insights

Short-term tests give quick feedback, but real engagement builds over time. Tracking attachment formation across several weeks, rather than a few sessions, gives a clearer picture of what supports trust and reduces loneliness. Rolling out updates periodically and monitoring interactions as relationships deepen helps avoid conclusions drawn from early, short-lived spikes in activity.

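Reliable tests require enough participants and well-controlled variables, so that differences between groups reflect the trait being tested rather than chance. Metrics such as message frequency, use of emotional keywords, and overall interaction quality give depth to the analysis. Recording users' stated preferences alongside behavioral data helps interpret the results and spot novelty bias, where a change looks better at first simply because it is new.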
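How many participants count as "enough" depends on how large a difference you hope to detect. For a yes/no metric such as whether a user returns the next day, the standard normal-approximation formula for comparing two proportions gives a rough number of users per group. The 30% and 35% return rates below are hypothetical, and the function name is ours:

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_a: float, p_b: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per variant to detect a shift from p_a to p_b.

    Normal approximation for a two-sided test of two proportions:
        n = (z_{1-alpha/2} + z_{power})^2 * (p_a(1-p_a) + p_b(1-p_b)) / (p_a - p_b)^2
    """
    if p_a == p_b:
        raise ValueError("p_a and p_b must differ")
    std_normal = NormalDist()  # mean 0, standard deviation 1
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)
    z_power = std_normal.inv_cdf(power)
    variance = p_a * (1 - p_a) + p_b * (1 - p_b)
    n = (z_alpha + z_power) ** 2 * variance / (p_a - p_b) ** 2
    return math.ceil(n)  # round up: you cannot recruit a fraction of a user


# Hypothetical scenario: 30% of users return the next day under variant A,
# and we want to detect an improvement to 35% under variant B.
print(sample_size_per_group(0.30, 0.35))
```

Because the difference between the two rates is squared in the denominator, halving the effect you want to detect roughly quadruples the group size, which is why subtle personality tweaks need large groups.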

Which metrics matter most for engagement?

Assessing engagement goes beyond counting messages. Detailed behavioral data reveals which personality pairings inspire longer conversations, consistent check-ins, and richer emotional sharing, all key to a fulfilling AI companion experience.

Researchers and enthusiasts favor practical metrics so that each experiment can be judged by genuine improvements in well-being and connection. Robust measurement makes it possible to weigh benefits against risks while keeping authenticity and user comfort in view. Useful metrics include:

- Average conversation length per session

- Rate of repeated daily interactions

- Number of messages containing emotional keywords

- Reported feelings of loneliness before and after changes

- Frequency of users introducing personal stories or memories
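Several of these metrics can be computed directly from a message log. The sketch below covers the first three; loneliness scores come from surveys, and personal stories are harder to detect automatically, so both are left out. The `Message` record, the keyword list, and the reading of "repeated daily interactions" as "share of users active on more than one day" are all assumptions:

```python
from collections import defaultdict
from dataclasses import dataclass

# Hypothetical keyword list; a real study would use a validated lexicon.
EMOTION_WORDS = {"happy", "sad", "lonely", "miss", "love", "worried"}


@dataclass
class Message:
    user_id: str
    session_id: str  # assumed unique across all users
    day: int         # day index since the experiment started
    text: str


def engagement_metrics(messages: list[Message]) -> dict[str, float]:
    sessions = defaultdict(int)     # session_id -> number of messages
    active_days = defaultdict(set)  # user_id -> days with at least one message
    emotional = 0
    for m in messages:
        sessions[m.session_id] += 1
        active_days[m.user_id].add(m.day)
        # Lowercase words with trailing punctuation stripped, then check overlap.
        words = {w.strip(".,!?").lower() for w in m.text.split()}
        if words & EMOTION_WORDS:
            emotional += 1
    users = len(active_days)
    return {
        "avg_messages_per_session": sum(sessions.values()) / len(sessions) if sessions else 0.0,
        "repeat_day_user_rate": sum(1 for d in active_days.values() if len(d) > 1) / users if users else 0.0,
        "emotional_messages": float(emotional),
    }


log = [
    Message("u1", "s1", 0, "Hi there"),
    Message("u1", "s1", 0, "I feel lonely today."),
    Message("u1", "s2", 1, "Back again"),
    Message("u2", "s3", 0, "Hello!"),
]
print(engagement_metrics(log))
```

Running the same function separately on each variant's log gives the side-by-side numbers an A/B comparison needs.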

Analyzing trends weekly or daily makes it possible to spot slow-building effects, especially those tied to attachment formation or loneliness reduction. An increase in self-disclosure, such as sharing personal memories, often signals deepening engagement.

Not all outcomes are positive, however. If certain traits lead to fewer return visits or awkward silences, those signals deserve close attention. Balancing personality tweaks helps keep the AI companion supportive and enjoyable rather than forced or repetitive.
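A drop in return visits only matters if it is larger than ordinary chance variation. One standard check is a two-proportion z-test on the share of users in each group who came back. This sketch uses the normal approximation, which assumes reasonably large groups; the counts are made up for illustration:

```python
import math
from statistics import NormalDist


def two_proportion_z_test(returned_a: int, n_a: int,
                          returned_b: int, n_b: int) -> tuple[float, float]:
    """Compare return rates of two variants; returns (z, two-sided p-value)."""
    p_a = returned_a / n_a
    p_b = returned_b / n_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    pooled = (returned_a + returned_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all or no users returned; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical counts: users who came back within a week, out of 1,000 per variant.
z, p = two_proportion_z_test(returned_a=300, n_a=1000, returned_b=250, n_b=1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A negative z means variant B's return rate is lower than A's; a small p-value suggests the drop is unlikely to be noise alone.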

Benefits and risks of personality A/B testing in AI girlfriends

A/B testing opens the door to a high degree of personalization. Discovering which traits cultivate genuine engagement lets users enjoy more empathetic, responsive digital relationships. During lonely times, an AI girlfriend that adapts to individual needs can provide much-needed companionship and support.

Caution is still vital. Over-optimizing, or pushing for attachment without clear boundaries, can have unintended consequences such as manipulation or unhealthy dependence. Excessive fine-tuning can also make interactions feel predictable or calculated. Responsible experimentation, guided by user feedback and continuous behavioral data, helps maintain a healthy balance.

Benefits:

- Greater authenticity in virtual relationships

- Opportunities for loneliness reduction over time

Risks:

- Potential for engineered emotional manipulation

- Dependence that crowds out real-world social growth

Managed thoughtfully, A/B testing can improve the user experience while respecting emotional boundaries. Incremental, data-driven adjustments build trust and satisfaction and refine the relationship collaboratively. Being transparent with users about the goals and methods of each experiment fosters mutual understanding throughout the process.

Experimenting with personality traits in AI girlfriends is shaping the future of digital companionship. Anyone interested in improving their connection or learning more about engagement dynamics is encouraged to join A/B testing programs, share feedback, and help shape the next generation of meaningful virtual relationships.

Ready to take part? Join an A/B testing initiative or share your input to help digital romance evolve.